Overview

Family members and friends of a person with hearing loss often have to learn sign language in order to understand and communicate with them. Learning it can be time-consuming, and they may at times misinterpret what the person is trying to convey.

This project aims to build an application that bridges ASL users and English speakers by using machine learning to translate sign language into English letters and simple words in real time.

Project Components

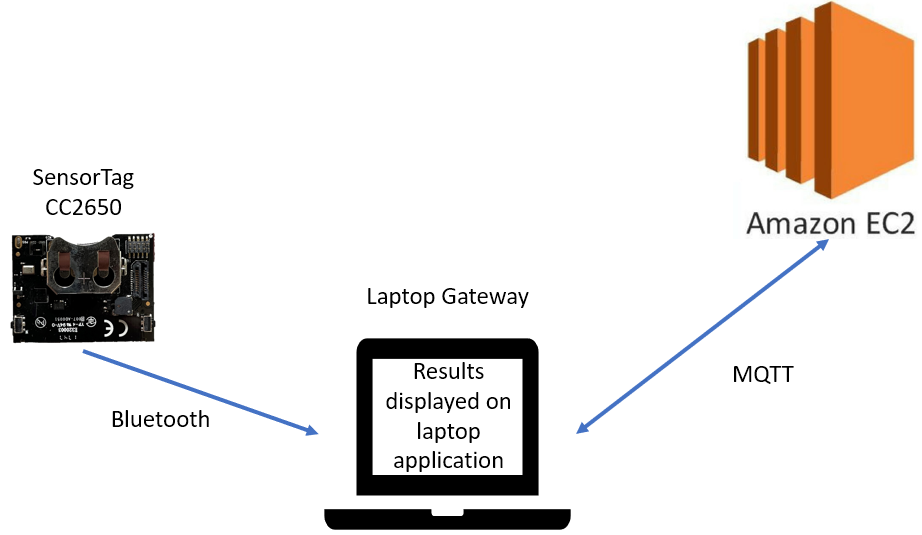

(System Architecture)

- SensorTag CC2650: Accelerometer and gyroscope sensor

- Laptop camera: To capture hand signs in real time

- AWS EC2: To host our trained model in the cloud for image processing

The SensorTag periodically tracks the user's hand movement and sends the data to the gateway via Bluetooth. The gateway then forwards the data to the cloud via MQTT for processing. Once classification is complete, the results are sent back from the cloud to the application via MQTT and displayed on the screen in real time.
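As a rough illustration of the data flow described above, the sketch below shows one way the messages exchanged over MQTT could be serialized. It is not the project's actual code: the topic names, the `MotionSample` fields, the JSON encoding, and all function names are assumptions made for this example. An MQTT client library (for example paho-mqtt) would carry these byte payloads between the gateway, the cloud, and the application; that part is left out so the sketch stays self-contained.

```python
import json
from dataclasses import dataclass, asdict

# Hypothetical topic names; the real topics are not given in this document.
SENSOR_TOPIC = "asl/sensor"   # gateway -> cloud: raw motion samples
RESULT_TOPIC = "asl/result"   # cloud -> application: classification results


@dataclass
class MotionSample:
    """One accelerometer + gyroscope reading (assumed layout)."""
    timestamp: float
    accel: tuple  # (x, y, z)
    gyro: tuple   # (x, y, z)


def encode_sample(sample: MotionSample) -> bytes:
    """Serialize a sample to a JSON payload suitable for an MQTT publish."""
    return json.dumps(asdict(sample)).encode("utf-8")


def decode_sample(payload: bytes) -> MotionSample:
    """Rebuild a sample on the cloud side from the received payload."""
    data = json.loads(payload.decode("utf-8"))
    return MotionSample(data["timestamp"], tuple(data["accel"]), tuple(data["gyro"]))


def encode_result(label: str, confidence: float) -> bytes:
    """Serialize a classification result for the return trip."""
    return json.dumps({"label": label, "confidence": confidence}).encode("utf-8")


def decode_result(payload: bytes) -> tuple:
    """Extract (label, confidence) in the application before display."""
    data = json.loads(payload.decode("utf-8"))
    return data["label"], data["confidence"]
```

In this sketch the gateway would publish `encode_sample(...)` on `SENSOR_TOPIC`, and the application would subscribe to `RESULT_TOPIC` and pass each payload to `decode_result` before updating the screen.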

Proof of Concept

Attached is a video recording of the actual demonstration.